Open WebUI Tools are Python scripts provided to an LLM during a request, allowing it to perform specific actions and gain additional context. For effective use, your chosen LLM typically needs to support function calling. These tools unlock a wide range of chat capabilities, such as web search, web scraping, and API interactions within the conversation.
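As a rough illustration, here is a minimal sketch of what a Tool file can look like. It assumes the common Open WebUI convention of a single `Tools` class whose methods, with type hints and a docstring, are described to the model as callable functions. The method name `get_current_time` and its behavior are hypothetical examples, not a built-in Tool.

```python
from datetime import datetime, timezone


class Tools:
    def __init__(self):
        # Settings such as Valves are typically initialized here.
        pass

    def get_current_time(self, utc: bool = True) -> str:
        """
        Get the current date and time.
        :param utc: If True, return the time in UTC; otherwise use the server's local time zone.
        :return: The current time as an ISO 8601 string.
        """
        # Both branches return a timezone-aware datetime, so the string includes an offset.
        now = datetime.now(timezone.utc) if utc else datetime.now().astimezone()
        return now.isoformat()
```

The type hints and docstring matter here because they are the only description of the function the model sees when deciding whether to call it.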

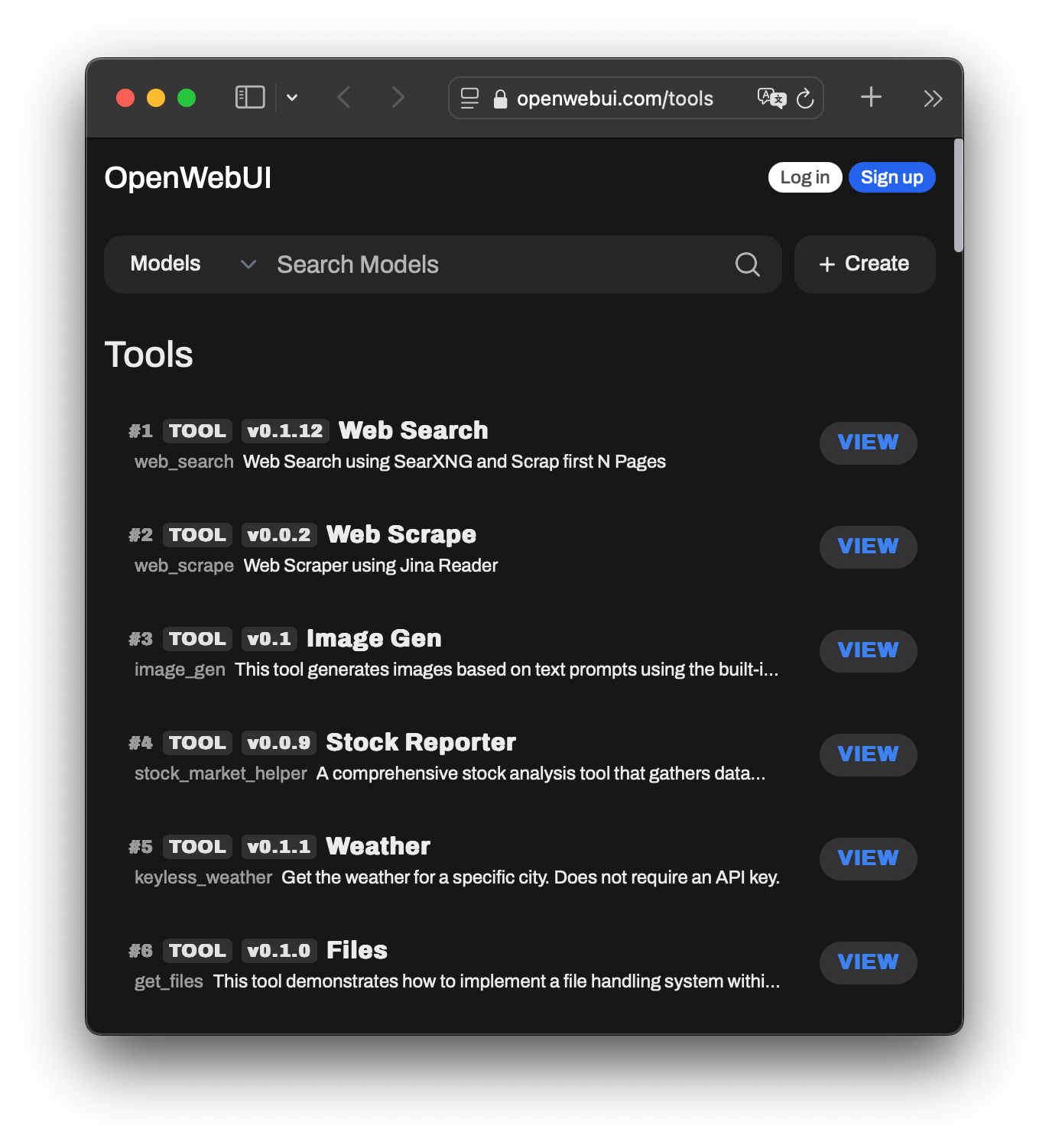

You can explore and easily import various tools available on the Community Website into your Open WebUI instance.

How to Use Open WebUI Tools

Once installed, Tools can be assigned to any LLM that supports function calling. To enable a Tool for a specific model, navigate to Workspace > Models, select the desired model, and click the pencil icon to edit its settings. Scroll down to the Tools section, check the Tools you want to activate, and click Save.

After Tools are enabled, you can access them during a chat by clicking the “+” icon. Keep in mind that enabling a Tool gives the LLM the option to call it but does not force it to do so.
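Function calling generally works by sending the model a description of each available tool alongside the conversation; the model may then respond with a tool call or simply answer in text. The sketch below builds an OpenAI-style chat request to illustrate this. It is a generic example of the function-calling format, not Open WebUI's internal request, and the model name and tool schema are placeholders. With `"tool_choice": "auto"`, the model decides whether to call the tool, which is why enabling a Tool does not force its use.

```python
import json


def build_chat_request(user_message: str) -> dict:
    """Build an OpenAI-style chat request that offers one tool to the model."""
    tool_spec = {
        "type": "function",
        "function": {
            "name": "get_current_time",  # hypothetical tool name
            "description": "Get the current date and time.",
            "parameters": {
                "type": "object",
                "properties": {
                    "utc": {"type": "boolean", "description": "Return UTC time if true."}
                },
                "required": [],
            },
        },
    }
    return {
        "model": "your-model-name",  # placeholder model name
        "messages": [{"role": "user", "content": user_message}],
        "tools": [tool_spec],
        # "auto" lets the model choose between calling the tool and answering directly.
        "tool_choice": "auto",
    }


print(json.dumps(build_chat_request("What time is it?"), indent=2))
```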

For automated Tool selection, consider the AutoTool Filter available on the community site. You’ll still need to follow the steps above to enable Tools for each model.

How to Install Tools

The installation process for Tools is straightforward, with two available methods:

1. Manual Download and Import

- Visit Community Tools.

- Select the Tool you wish to import.

- Click the blue “Get” button, then choose Download as JSON export.

- Upload the downloaded Tool into Open WebUI by going to Workspace > Tools and clicking Import Tools.

2. Import via Open WebUI URL

- Visit Community Tools.

- Select the Tool and click the blue Get button.

- Enter your Open WebUI instance’s IP address and click Import to WebUI, which automatically opens your instance and imports the Tool.

Note: Always ensure you understand the Tools you import, especially when they come from unknown sources. Running untrusted code can be risky.
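One lightweight way to start reviewing a Tool before enabling it is to list the modules its Python code imports. The helper below is a hypothetical sketch using Python's standard `ast` module, and the `SENSITIVE_MODULES` set is an illustrative assumption. It parses the code without running it and only reports imports, so it cannot catch things like `eval` or network calls made through harmless-looking libraries; treat it as a prompt for manual review, not a security check.

```python
import ast

# Hypothetical list of modules worth a closer look; adjust to your own policy.
SENSITIVE_MODULES = {"os", "subprocess", "shutil", "socket", "ctypes"}


def find_imports(source: str) -> set[str]:
    """Return the top-level module names imported by a piece of Python source."""
    tree = ast.parse(source)  # parses only; the code is never executed
    modules = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.module:
            # node.module is None for relative imports like "from . import x"
            modules.add(node.module.split(".")[0])
    return modules


def flag_sensitive_imports(source: str) -> set[str]:
    """Return imported modules that appear in SENSITIVE_MODULES."""
    return find_imports(source) & SENSITIVE_MODULES


example_tool = """
import os
import subprocess
from pydantic import BaseModel

class Tools:
    def run(self, cmd: str) -> str:
        return subprocess.run(cmd, shell=True, capture_output=True, text=True).stdout
"""
print(sorted(flag_sensitive_imports(example_tool)))  # ['os', 'subprocess']
```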

What Can Tools Do?

Tools expand the capabilities of interactive chats by enabling features like:

- Web Search: Conduct live searches for real-time information.

- Image Generation: Generate images based on user prompts.

- External Voice Synthesis: Integrate external voice services (e.g., ElevenLabs) to generate audio from LLM outputs via API calls.

Important Tool Components

Valves and UserValves (Optional, but Highly Encouraged)

Valves and UserValves let users supply dynamic settings such as API keys or configuration options. Their fields appear in the GUI as fillable inputs or boolean switches for the Tool.

- Valves: Configurable by admins only.

- UserValves: Configurable by any user.

They give admins and users control over a Tool’s configuration from the GUI, without editing its code.

Example:

```python
from pydantic import BaseModel, Field


class Tools:
    # Define Valves (configurable by admins)
    class Valves(BaseModel):
        priority: int = Field(default=0, description="Priority level for filter operations.")
        test_valve: int = Field(default=4, description="A valve controlling a numerical value")

    # Define UserValves (configurable by any user)
    class UserValves(BaseModel):
        test_user_valve: bool = Field(default=False, description="A user-controlled on/off switch")

    def __init__(self):
        # Instantiate Valves with their default values
        self.valves = self.Valves()
```

Event Emitters

Event Emitters add extra information to the chat interface, similar to Filter Outlets. Unlike filters, they cannot strip information, and they can be triggered at any stage of a Tool’s execution. There are two main types of Event Emitters:

- Status: Adds status messages above the message content during processing steps. This is useful for long-running or complex operations because it keeps users informed of progress in real time.

Example:

```python
await __event_emitter__(
    {
        "type": "status",
        "data": {"description": "Processing task...", "done": False},  # Status in progress
    }
)

# More logic here

await __event_emitter__(
    {
        "type": "status",
        "data": {"description": "Task completed", "done": True},  # Status completed
    }
)
```

- Message: Appends messages, embeds images, or renders web pages before, during, or after the LLM response.

Example:

```python
await __event_emitter__(
    {
        "type": "message",
        "data": {"content": "This message will be added to the chat."},
    }
)
```

These components are key to building interactive and responsive tools, providing both real-time feedback and customized configurations.
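To see how the status and message events fit together in one Tool method, the sketch below combines them and drives the method with a stand-in emitter that simply records each event. It assumes, as the snippets above imply, that Open WebUI passes an async `__event_emitter__` callable into the method. The `summarize` method and the `RecordingEmitter` class are hypothetical, used only so the flow can run outside Open WebUI.

```python
import asyncio


class Tools:
    async def summarize(self, text: str, __event_emitter__=None) -> str:
        """Hypothetical Tool method that reports progress while it works."""

        async def emit(event: dict) -> None:
            # Emitting is optional: skip silently if no emitter was provided.
            if __event_emitter__ is not None:
                await __event_emitter__(event)

        await emit({"type": "status", "data": {"description": "Summarizing...", "done": False}})
        summary = text[:40]  # placeholder for real work
        await emit({"type": "message", "data": {"content": f"Preview: {summary}"}})
        await emit({"type": "status", "data": {"description": "Summary ready", "done": True}})
        return summary


class RecordingEmitter:
    """Stand-in for Open WebUI's emitter that just stores the events it receives."""

    def __init__(self):
        self.events = []

    async def __call__(self, event: dict) -> None:
        self.events.append(event)


async def main() -> None:
    emitter = RecordingEmitter()
    result = await Tools().summarize("Open WebUI Tools can emit events.", __event_emitter__=emitter)
    for event in emitter.events:
        print(event["type"], event["data"])
    print("result:", result)


asyncio.run(main())
```

Wrapping the emitter in a small `emit` helper keeps the method usable even when no emitter is supplied, for example in a local test like this one.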