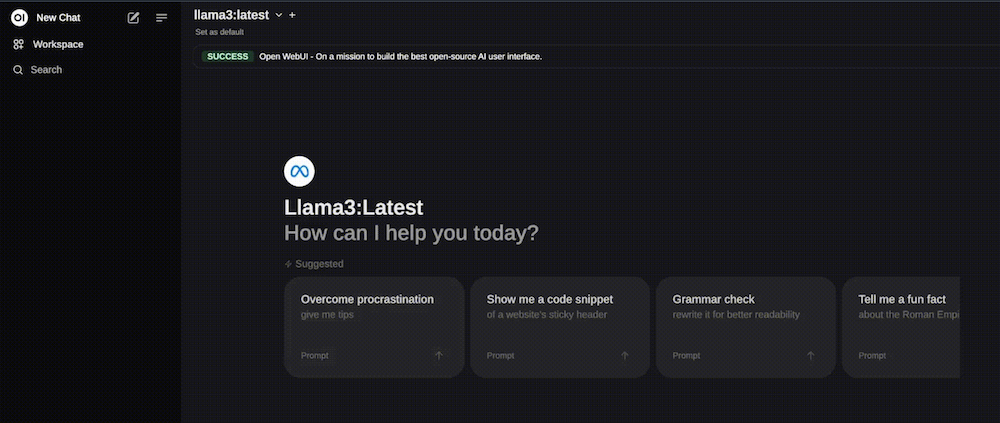

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs.

Open WebUI's features focus on easy integration and efficient performance. It installs with Docker or Kubernetes, which makes it deployable on a variety of platforms, and both `:ollama` and `:cuda` tagged images are available. Because it is compatible with the OpenAI API, it can connect to services such as LM Studio and GroqCloud, giving you a wide choice of conversational models.
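To make "OpenAI API compatibility" concrete, here is a minimal sketch of the kind of request any OpenAI-compatible server accepts. It only builds the request and does not send it. The base URL, API key, and model name are placeholder assumptions, and `build_chat_request` is a hypothetical helper written for this example, not part of Open WebUI.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for an OpenAI-compatible chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values (assumptions): point these at your own server and model.
    req = build_chat_request("http://localhost:1234/v1", "sk-placeholder", "example-model", "Hello!")
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Passing the resulting object to `urllib.request.urlopen` would send it. Since the request shape is the same across compatible providers, switching providers is mostly a matter of changing the base URL, key, and model name.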

The platform supports custom pipelines and plugins, so users can bring in Python libraries and add features such as function calling, rate limiting, and live translation. Its native Python function-calling tool lets large language models (LLMs) invoke custom Python code from within a chat.
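As a rough illustration of how function calling works in general, the sketch below describes a Python function to a model and then runs the call the model asks for. It is a generic pattern under stated assumptions, not Open WebUI's own tool format: `word_count`, `describe_tool`, and `dispatch` are hypothetical names, and the model's output is simulated.

```python
import inspect
import json
from typing import Callable, Dict


def word_count(text: str) -> int:
    """Count the whitespace-separated words in a piece of text."""
    return len(text.split())


def describe_tool(fn: Callable) -> dict:
    """Build an OpenAI-style function description from a Python signature."""
    type_names = {str: "string", int: "integer", float: "number", bool: "boolean"}
    params = inspect.signature(fn).parameters
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {
            "type": "object",
            # Unannotated or unknown types fall back to "string" in this sketch.
            "properties": {
                name: {"type": type_names.get(p.annotation, "string")}
                for name, p in params.items()
            },
            # Simplification: every parameter is treated as required.
            "required": list(params),
        },
    }


def dispatch(tools: Dict[str, Callable], call: dict):
    """Run the tool the model asked for, using its JSON-encoded arguments."""
    fn = tools[call["name"]]
    return fn(**json.loads(call["arguments"]))


if __name__ == "__main__":
    tools = {"word_count": word_count}
    print(json.dumps(describe_tool(word_count), indent=2))
    # Simulated model output; a real LLM would produce this structure itself.
    call = {"name": "word_count", "arguments": '{"text": "open source tools"}'}
    print(dispatch(tools, call))
```

Type hints and docstrings do the heavy lifting here: they are what lets the function be described to the model without writing a schema by hand.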

Open WebUI also integrates Retrieval-Augmented Generation (RAG) and web search, so users can load documents, retrieve them, or search the web from within a chat. Image generation and multi-model support are also available, which suits the platform to use cases ranging from content generation to multilingual communication.
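For readers new to RAG, the idea can be sketched in a few lines: find the document chunk most similar to the question, then put it in the prompt. This toy version uses simple word-count cosine similarity instead of the embedding models a real system would normally use, and it is not how Open WebUI implements retrieval. All function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = tokenize(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: List[str], k: int = 1) -> str:
    """Augment the question with the retrieved context before sending it to a model."""
    context = "\n".join(retrieve(query, chunks, k))
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Docker images are tagged ollama and cuda.",
        "Pipelines support rate limiting and live translation.",
    ]
    print(build_prompt("Which docker images exist?", docs))
```

The model never sees the whole document set, only the chunks that score highest against the question, which is what keeps prompts short as the library grows.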

We are an unofficial community of AI enthusiasts who are passionate about open-source tools. On this website, we will explore Open WebUI's capabilities and share our expertise.